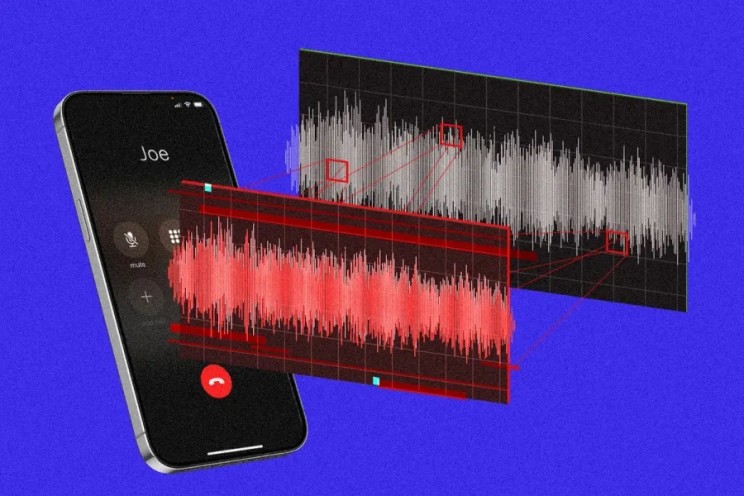

With rapidly improving AI, voice cloning has only gotten more convincing. That’s a problem.

SOURCE: Tech Brew

Voice calls imitating public figures or even relatives can have disastrous consequences.

When some New Hampshire voters answered the phone in January, they heard a very familiar voice. “What a bunch of malarkey,” President Joe Biden said, urging residents to “save your vote for the November election” and skip the state’s Jan. 23 presidential primary.

If you’re thinking that doesn’t sound like something Biden would actually say, you’d be right. New Hampshire officials now believe that the robocalls residents received were AI-generated, mimicking the president’s voice in an “unlawful attempt to…suppress New Hampshire voters,” the state AG’s office said in a statement.

It’s the latest high-profile example of fast-improving AI technology that can churn out convincing audio clones for potentially nefarious purposes. And it’s not only being used to clone the voices of celebrities and public figures: Everyday people could find themselves the victims, or targets, of a robocall clone campaign.